Thought I would share some lessons learned from the last couple of years of working with data pipelines. I am also taking the opportunity to lay out my own understanding, hoping for some good feedback.

Building a security solution implies analysing data. But before the data can be analysed there is usually a lot of work to be done. There is some truth in the saying that a data scientist's job consists of x parts data engineering and y parts data analysis, where x is a much (much) larger number than y. The data engineering part is where the data pipeline comes into play.

Building reliable data pipelines is a challenge. The applications and frameworks involved do not have to be very difficult in themselves, but the system they form together can easily become complex.

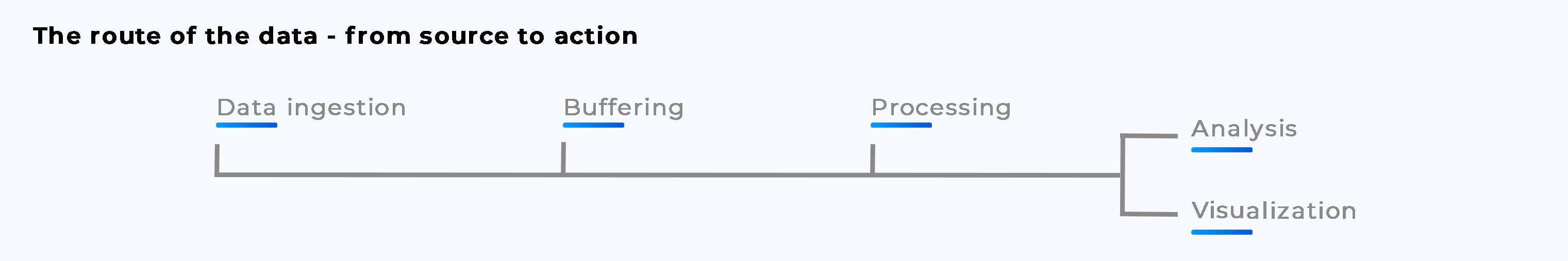

Data pipelines are, for us as for many companies, part of the core business. In our case, analysing data for mitigation and providing customer feedback through visualization are both key functions of the product we provide.

Where to start?

Let us define the scope as running from the point of data collection to the data store where data is ready for consumption. The point of data ingestion is usually designed with respect to the requirements on inputs and outputs, buffering, codec and protocol support. There is a data source somewhere that is generating this data. That source is left out of this discussion.

Whether a data pipeline feeds systems that are supposed to take instant action or stores data for later consumption puts different requirements on buffers and speed. We leverage Logstash for several of our ingestion points since it offers both in-memory buffers (speed) and persistent queues. The right amount of buffering, in the right place, is something we iterate on over and over. Buffering too little creates a risk of data loss, while buffering too much can cause delay. A system that needs to act on what is happening now needs to analyse data from the present, not the past. What has happened has already been.
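To make the buffering trade-off concrete, here is a minimal Go sketch of a bounded in-memory buffer that drops and counts events when it is full. It is not how Logstash works internally; the `Buffer` type and its methods are hypothetical names used only for illustration.

```go
package main

import "fmt"

// Buffer is a hypothetical bounded in-memory queue sitting between an
// ingestion point and a slower downstream stage.
type Buffer struct {
	events  chan string
	Dropped int // events rejected because the buffer was full
}

// NewBuffer creates a buffer that holds at most capacity events.
func NewBuffer(capacity int) *Buffer {
	return &Buffer{events: make(chan string, capacity)}
}

// Offer enqueues without blocking. When the buffer is full the event is
// dropped and counted, which makes the data-loss risk visible.
// The counter is not synchronized, so call Offer from a single goroutine.
func (b *Buffer) Offer(event string) bool {
	select {
	case b.events <- event:
		return true
	default:
		b.Dropped++
		return false
	}
}

// Len reports how many events are waiting. A large backlog means that
// consumers are working on data from the past.
func (b *Buffer) Len() int { return len(b.events) }

func main() {
	small := NewBuffer(2)
	for i := 0; i < 5; i++ {
		small.Offer(fmt.Sprintf("event-%d", i))
	}
	fmt.Println("queued:", small.Len(), "dropped:", small.Dropped) // queued: 2 dropped: 3
}
```

A larger capacity would lower the drop count here, at the price of a longer backlog for the consumer to work through, which is the delay side of the same trade-off.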

The next stop after data ingestion is Kafka, which we use for multiple purposes such as buffering, data retention and data distribution. We try to limit the complexity of the ingestion step as much as possible, which leads us to keep raw and unprocessed data in Kafka. It is in the stages after the first Kafka topic that we parse and decode the data, and that is where information can get lost. Having enough retention on the Kafka topics allows us to re-read the raw data and correct whatever mistake we might have made. By data distribution, I’m referring to having multiple consumer groups process the same data in various ways, independently. Kafka does not only serve as the second step in our data pipelines. It also serves as the third for those pipelines that leverage multiple processing stages.
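The two ideas in this step, independent consumer groups and re-reading retained raw data, can be illustrated with a small in-memory model. This is only a sketch of the concept and does not use a Kafka client; `Topic`, `Poll` and `Rewind` are hypothetical names.

```go
package main

import "fmt"

// Topic is a toy model of an append-only log that keeps raw records and
// tracks one read offset per consumer group.
type Topic struct {
	records []string
	offsets map[string]int
}

func NewTopic() *Topic { return &Topic{offsets: map[string]int{}} }

// Append adds a raw record to the end of the log.
func (t *Topic) Append(record string) { t.records = append(t.records, record) }

// Poll returns the records the group has not read yet and advances only
// that group's offset, so groups consume independently of each other.
func (t *Topic) Poll(group string) []string {
	start := t.offsets[group]
	out := append([]string(nil), t.records[start:]...)
	t.offsets[group] = len(t.records)
	return out
}

// Rewind moves a group back to the start of the retained log so it can
// re-process the raw data, for example after fixing a parsing bug.
func (t *Topic) Rewind(group string) { t.offsets[group] = 0 }

func main() {
	raw := NewTopic()
	raw.Append("raw-1")
	raw.Append("raw-2")

	// Both groups see both records.
	fmt.Println(len(raw.Poll("parser")), len(raw.Poll("archiver"))) // 2 2

	// The parser group re-reads the raw data; the archiver is unaffected.
	raw.Rewind("parser")
	fmt.Println(len(raw.Poll("parser")), len(raw.Poll("archiver"))) // 2 0
}
```

In a real deployment the rewind is only possible for as long as the topic's retention keeps the raw records around, which is why retention is sized with such mistakes in mind.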

For processing and enriching the data we use different applications and tools depending on the use case. Logstash is convenient when it is sufficient; otherwise we mostly run Go applications for more customized processing.

To facilitate visualization of the data, we need a sink from which it can be retrieved. We use both TimescaleDB and Elasticsearch for this purpose, making great use of TimescaleDB's continuous aggregates, which really empower it for this use case. We have recently discovered rollups in Elasticsearch; they are currently in beta but seem very promising.
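The idea behind both continuous aggregates and rollups is to pre-aggregate raw rows into fixed time buckets so that dashboards query far fewer rows. The Go sketch below shows only that bucketing idea; it is not how TimescaleDB or Elasticsearch implement it, and `Sample` and `Rollup` are hypothetical names.

```go
package main

import "fmt"

// Sample is a hypothetical raw measurement: a Unix timestamp in seconds
// and a byte count observed at that time.
type Sample struct {
	UnixSec int64
	Bytes   int64
}

// Rollup sums Bytes per fixed-width time bucket. Each bucket is keyed by
// its start time in Unix seconds. Timestamps are assumed non-negative.
func Rollup(samples []Sample, widthSec int64) map[int64]int64 {
	buckets := make(map[int64]int64)
	for _, s := range samples {
		start := s.UnixSec - s.UnixSec%widthSec
		buckets[start] += s.Bytes
	}
	return buckets
}

func main() {
	samples := []Sample{
		{UnixSec: 0, Bytes: 10},
		{UnixSec: 30, Bytes: 20},
		{UnixSec: 60, Bytes: 5},
		{UnixSec: 119, Bytes: 20},
		{UnixSec: 120, Bytes: 5},
	}
	// Five raw rows collapse into three one-minute buckets.
	fmt.Println(Rollup(samples, 60)) // map[0:30 60:25 120:5]
}
```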

Redundancy vs simplicity

Building data pipelines with redundancy is very nice in theory, but it comes at a cost. Building, maintaining and deploying distributed systems creates complexity. In addition, latency is crucial, and distributed data pipelines require synchronization, which takes time. When speed is important it might be a bad decision to go the distributed way. However, when some latency is acceptable and loss of data is bad for business, it might just be worth the investment.

Metrics vs logs

Use metrics over logs, and visualize them. There are various ways to go about troubleshooting a data pipeline. We started using metrics early to track the status of our applications. Now we often look at the metrics as a starting point and at the logs as a second step. Since metrics are easy to visualize and help one quickly diagnose applications, we then have a better understanding of what to look for in the logs.
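As an illustration of what per-stage metrics can look like inside a Go application, here is a minimal sketch with two counters. The `StageMetrics` type is a hypothetical name, and the empty-record check merely stands in for a real parse failure; a real setup would export such counters to a metrics system rather than print them.

```go
package main

import (
	"fmt"
	"sync"
	"sync/atomic"
)

// StageMetrics holds hypothetical counters for one processing stage.
// The fields are updated atomically so concurrent workers can share them.
type StageMetrics struct {
	received int64
	failed   int64
}

// Process counts every record and, separately, every record that fails.
// An empty record stands in for a parse failure in this sketch.
func (m *StageMetrics) Process(record string) {
	atomic.AddInt64(&m.received, 1)
	if record == "" {
		atomic.AddInt64(&m.failed, 1)
	}
}

// Snapshot returns the current counter values.
func (m *StageMetrics) Snapshot() (received, failed int64) {
	return atomic.LoadInt64(&m.received), atomic.LoadInt64(&m.failed)
}

func main() {
	var m StageMetrics
	var wg sync.WaitGroup
	for _, r := range []string{"a", "", "b", ""} {
		wg.Add(1)
		go func(rec string) {
			defer wg.Done()
			m.Process(rec)
		}(r)
	}
	wg.Wait()

	received, failed := m.Snapshot()
	fmt.Printf("received=%d failed=%d\n", received, failed) // received=4 failed=2
}
```

A rising `failed` count on a graph tells you which stage to investigate and roughly when the problem started, which narrows down what to search for in the logs.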

Tooling

Having your infrastructure up and running is a good starting point, but to get the full potential from it there is usually work to be done after that. Being able to configure and read settings is important to get the most, and the right things, out of your infrastructure. We use various scripts to configure and work with Kafka, PostgreSQL and Elasticsearch. Retention policies, replicas, names and indexes are some of the settings that usually vary across a pipeline and require tuning along the way, as experience and knowledge increase. This for sure creates more work to begin with but boy does it make us more agile.
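One thing such tooling often needs is a way to compare the settings you want with the settings that are actually in place. The Go sketch below shows that comparison for per-topic settings. It is an illustration only and not our actual scripts; `TopicSettings`, `Drift` and the example values are hypothetical, and reading the real settings from Kafka is left out.

```go
package main

import (
	"fmt"
	"sort"
)

// TopicSettings is a hypothetical subset of per-topic settings that tend
// to be tuned over time.
type TopicSettings struct {
	RetentionHours int
	Replicas       int
}

// Drift returns the sorted names of topics that are missing from actual
// or whose actual settings differ from the desired ones.
func Drift(desired, actual map[string]TopicSettings) []string {
	var names []string
	for name, want := range desired {
		if got, ok := actual[name]; !ok || got != want {
			names = append(names, name)
		}
	}
	sort.Strings(names)
	return names
}

func main() {
	desired := map[string]TopicSettings{
		"raw":    {RetentionHours: 168, Replicas: 3},
		"parsed": {RetentionHours: 24, Replicas: 3},
	}
	actual := map[string]TopicSettings{
		"raw":    {RetentionHours: 168, Replicas: 3},
		"parsed": {RetentionHours: 12, Replicas: 3},
	}
	fmt.Println(Drift(desired, actual)) // [parsed]
}
```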

Tools could of course be turned into applications, creating some overhead in terms of agility but providing robustness in return, with the potential for adding tests and so on.

Do you agree or disagree with some of these aspects? Please reach out, feedback is very much welcome!

PS

Don’t keep more data than you need. Just because you can is not a valid argument. More data creates more work. If there is no business value to it, skip it.

Contact us?

Get in touch with Pär by emailing hello@baffinbaynetworks.com and type [Reply on The four stages of our Data Pipeline] in the subject line.